Load Balancing

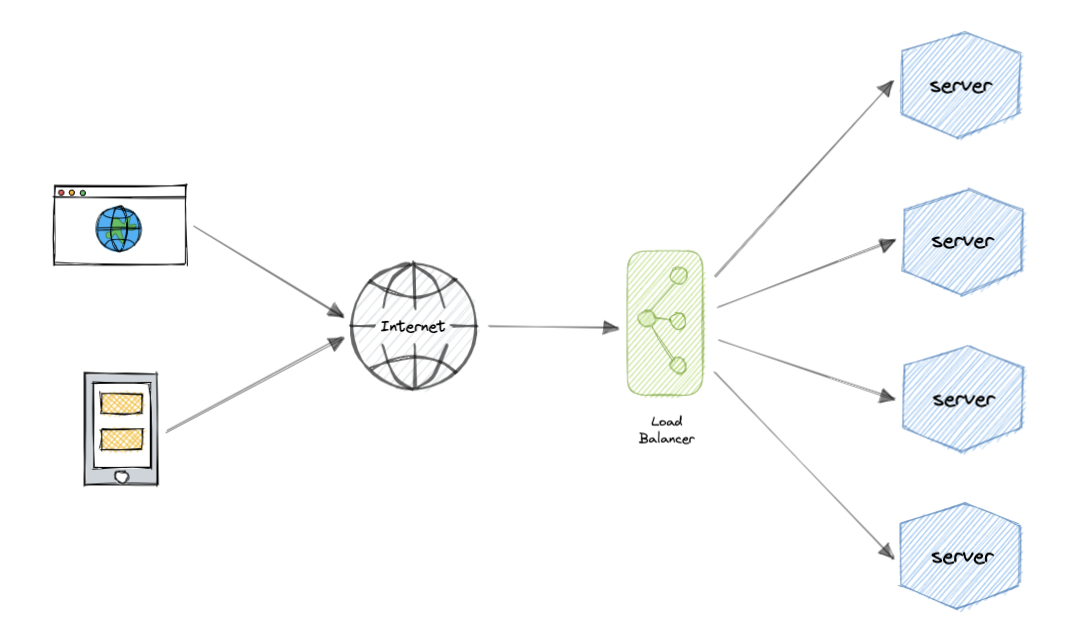

Load balancing refers to the process of distributing incoming network or application traffic across a group of backend servers. Its primary goal is to prevent any single server from becoming a bottleneck, thereby ensuring smooth operation and high availability.

Put simply, given n servers, load balancing is the practice of spreading the load as evenly as possible across all of them.

It also provides the flexibility to add or remove servers as demand dictates.

Consistent hashing is one technique that helps us distribute the load evenly across all servers.
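To make the idea concrete, here is a minimal sketch of a consistent hash ring. The class name `ConsistentHashRing`, the `replicas` parameter (virtual nodes per server), and the choice of SHA-256 are all assumptions made for illustration, not something the article prescribes.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a position on the ring (a large integer)."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Hypothetical ring; `replicas` virtual nodes per server smooth the spread."""

    def __init__(self, servers=(), replicas: int = 100):
        self.replicas = replicas
        self._points = []  # sorted ring positions
        self._owner = {}   # ring position -> server name
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        # Place several virtual nodes for the server around the ring.
        for i in range(self.replicas):
            point = _hash(f"{server}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = server

    def remove(self, server: str) -> None:
        # Take the server's virtual nodes off the ring.
        for i in range(self.replicas):
            point = _hash(f"{server}#{i}")
            self._points.remove(point)
            del self._owner[point]

    def lookup(self, key: str) -> str:
        if not self._points:
            raise LookupError("no servers on the ring")
        # First position clockwise from the key's hash, wrapping at the end.
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[idx]]
```

With this layout, adding a server only moves keys onto the new server; keys never shuffle between the servers that were already present, which is the property that makes the scheme attractive when the pool grows or shrinks.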

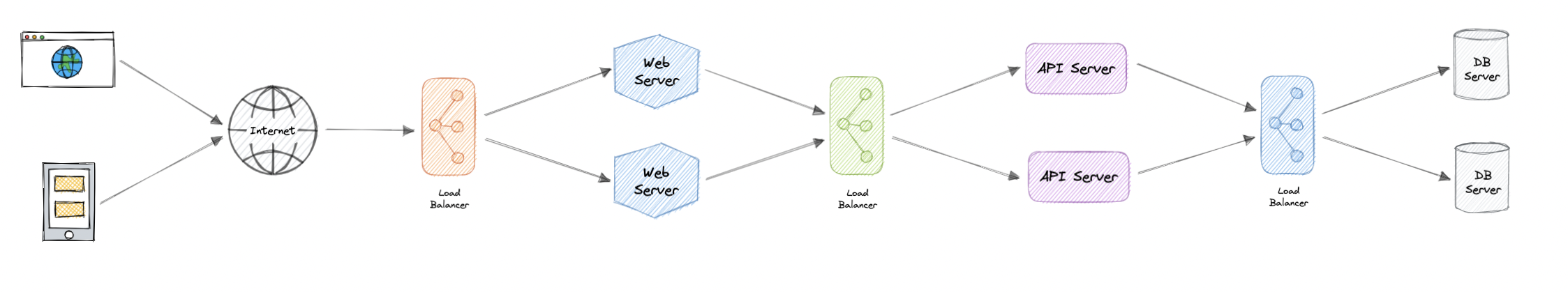

For additional scalability and redundancy, we can load balance at each layer of our system.

Modern high-traffic websites must serve hundreds of thousands, if not millions, of concurrent requests from users or clients. To cost-effectively scale to meet these high volumes, modern computing best practice generally requires adding more servers.

A load balancer can sit in front of the servers and route client requests across all servers capable of fulfilling those requests in a manner that maximizes speed and capacity utilization. This ensures that no single server is overworked, which could degrade performance. If a single server goes down, the load balancer redirects traffic to the remaining online servers. When a new server is added to the server group, the load balancer automatically starts sending requests to it.
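The failover and scale-out behaviour described above can be sketched as a small pool that tracks which servers may receive traffic. `ServerPool` and its method names are hypothetical, and a random pick stands in for a real balancing algorithm; in practice the health state would come from periodic health checks.

```python
import random


class ServerPool:
    """Hypothetical pool that tracks which backends may receive traffic."""

    def __init__(self, servers):
        self._healthy = {name: True for name in servers}

    def add(self, name: str) -> None:
        # A newly added server becomes eligible for traffic immediately.
        self._healthy[name] = True

    def mark_down(self, name: str) -> None:
        # Called when a health check fails (the check itself is assumed).
        self._healthy[name] = False

    def mark_up(self, name: str) -> None:
        self._healthy[name] = True

    def available(self) -> list:
        return [name for name, ok in self._healthy.items() if ok]

    def pick(self) -> str:
        candidates = self.available()
        if not candidates:
            raise RuntimeError("no healthy servers")
        # Random choice is a placeholder for a real algorithm.
        return random.choice(candidates)
```

Once `mark_down("web-2")` is called, `pick()` never returns `web-2` until it is marked up again, and a server passed to `add()` can be chosen on the very next request.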

Common Load Balancing Techniques

- Round Robin: Distributes requests sequentially across available servers, so each server receives roughly the same number of requests.

- Weighted Round Robin: Similar to round robin, but assigns a weight to each server based on its capacity. Servers with higher capacity receive more requests.

- Least Connections: Directs traffic to the server with the fewest active connections, which can be useful in environments where requests vary significantly in resource consumption.

- IP Hashing: Uses the client’s IP address to determine which server receives the request, which can help with session persistence.

- Dynamic Load Balancing: Monitors server performance in real-time and directs traffic based on current load conditions.
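The first four techniques above can be sketched in a few lines each. The function names and sample data are assumptions for illustration; the weighted variant shown is the simplest non-interleaved form, and dynamic load balancing would look much like `least_connections` with a live load metric in place of the connection count.

```python
import hashlib
import itertools


def round_robin(servers):
    """Yield servers in a repeating fixed order."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield each server `weight` times per cycle (simple, non-interleaved)."""
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)


def least_connections(active):
    """Return the server with the fewest active connections."""
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to the same server while the server list is unchanged."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(["a", "b", "c"])
print([next(rr) for _ in range(4)])                 # ['a', 'b', 'c', 'a']

wrr = weighted_round_robin({"big": 3, "small": 1})
print([next(wrr) for _ in range(4)])                # ['big', 'big', 'big', 'small']

print(least_connections({"a": 5, "b": 2, "c": 7}))  # b
```

Note that plain modulo hashing in `ip_hash` remaps many clients whenever the server list changes, which is the weakness consistent hashing is meant to address.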

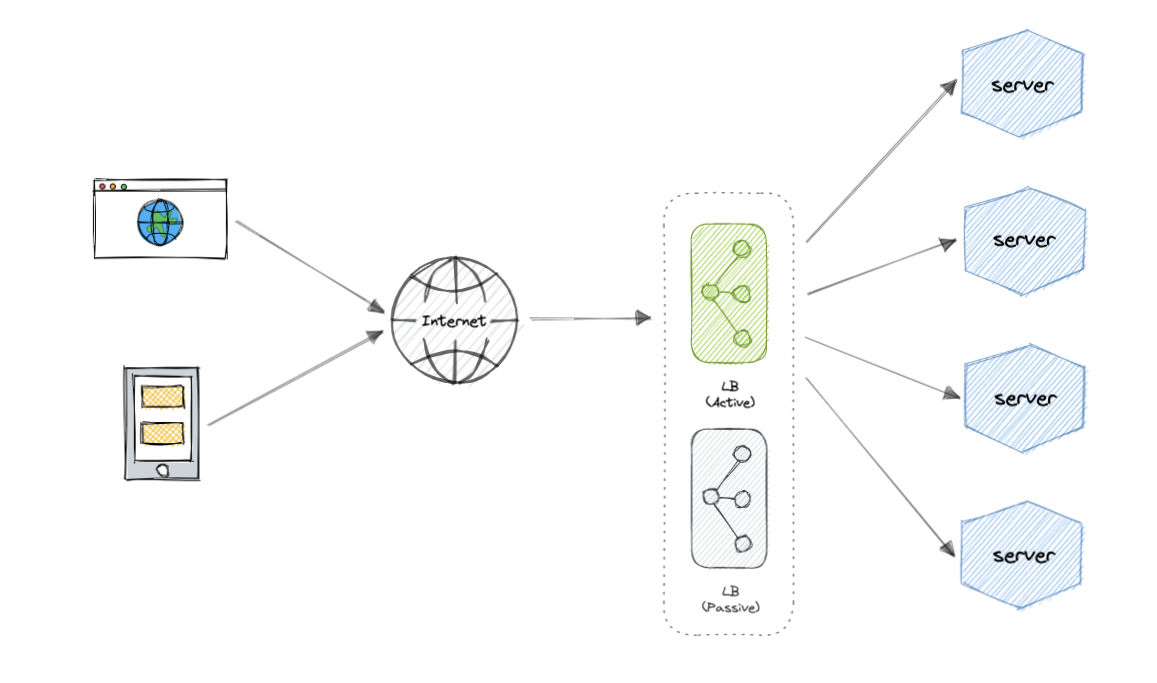

Redundant Load Balancers

The load balancer itself can be a single point of failure. To overcome this, a second load balancer (or N of them) can be run together as a cluster.

If a failure is detected in the active load balancer, a passive load balancer can take over, which makes our system more fault-tolerant.
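As a rough sketch of the active-passive arrangement, the class below treats the first healthy node in a priority list as the active one. `LoadBalancerCluster` and its methods are hypothetical, and failure detection (for example, heartbeats between nodes) is assumed to happen elsewhere and report in through `report_failure`.

```python
class LoadBalancerCluster:
    """Hypothetical active-passive group: the first healthy node is active."""

    def __init__(self, nodes):
        self._nodes = list(nodes)          # priority order
        self._healthy = set(self._nodes)

    def report_failure(self, node: str) -> None:
        # Assumed to be called by an external heartbeat or health check.
        self._healthy.discard(node)

    def report_recovery(self, node: str) -> None:
        if node in self._nodes:
            self._healthy.add(node)

    def active(self) -> str:
        # The highest-priority healthy node handles traffic.
        for node in self._nodes:
            if node in self._healthy:
                return node
        raise RuntimeError("all load balancers are down")
```

Here a recovered node reclaims the active role because it sits earlier in the priority list; some setups prefer to leave the standby in charge to avoid a second switchover, which is a design choice rather than a requirement.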

How does a system with a load balancer work?

A system with a load balancer works by acting as an intermediary between client requests and a pool of backend servers. Here's a step-by-step breakdown of the process:

- A user device sends a request to a DNS server.

- The DNS server translates the domain name into an IP address.

- The device receives an IP address corresponding to the load balancer—not directly to the web server—ensuring that end users only interact with the load-balancer layer.

- The user device then establishes an HTTP(S) connection with the load balancer using the provided IP address.

- Upon receiving the request, the load balancer serves as the gateway for the traffic. It uses a load-balancing algorithm—taking into account factors such as server availability and current load—to determine which web server should handle the request.

- The load balancer forwards the request to the chosen web server using private IP addresses that are only accessible within the internal network.

- The selected web server processes the request and generates a response.

- The web server sends its response back to the load balancer.

- Finally, the load balancer relays the response to the user device, which treats it as if it came directly from the intended web server.
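The steps above can be simulated in memory with a short sketch. Every name and address here (`DNS_RECORDS`, `make_backend`, `LoadBalancer`, `client_request`, the IPs) is made up for illustration, and round robin stands in for whatever algorithm the load balancer actually uses.

```python
import itertools

# Domain name -> public IP of the load balancer (illustrative values).
DNS_RECORDS = {"example.com": "203.0.113.10"}


def make_backend(private_ip):
    """Return a fake web server reachable only by its private IP."""
    def handle(path):
        return f"200 OK {path} (served by {private_ip})"
    return handle


BACKENDS = {ip: make_backend(ip) for ip in ("10.0.0.1", "10.0.0.2")}


class LoadBalancer:
    def __init__(self, public_ip, backends):
        self.public_ip = public_ip
        self._backends = backends
        self._next = itertools.cycle(backends)  # round robin over private IPs

    def handle(self, path):
        private_ip = next(self._next)                # choose a web server
        response = self._backends[private_ip](path)  # forward the request
        return response                              # relay the response


def client_request(domain, path, balancers):
    public_ip = DNS_RECORDS[domain]           # DNS resolves to the load balancer
    return balancers[public_ip].handle(path)  # client only talks to the balancer


lb = LoadBalancer("203.0.113.10", BACKENDS)
balancers = {lb.public_ip: lb}
print(client_request("example.com", "/", balancers))
print(client_request("example.com", "/", balancers))
```

The client code never sees a `10.0.0.x` address as a destination: it resolves the domain, talks to the public IP, and receives the response from there.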

Where do we add a load balancer?

- Between Clients and Frontend Web Servers: The load balancer serves as the initial touchpoint for incoming client requests. It receives traffic directly from clients and distributes it among the available frontend web servers.

- Between Frontend Web Servers and Backend Application Servers: In architectures featuring multiple frontend servers, a load balancer directs traffic from these servers to the appropriate backend application servers, ensuring an even workload distribution.

- Between Backend Application Servers and Cache Servers: The load balancer routes requests from application servers to cache servers, which store frequently accessed data in memory to speed up response times.

- Between Cache Servers and Database Servers: When there are several cache servers in use, a load balancer helps distribute requests from the caches to the database servers, preventing any single database from being overwhelmed with traffic.
