Memory Protection

Memory protection is a critical mechanism in modern operating systems and CPUs that prevents processes from accessing memory they do not have permission to use. It ensures that a program can only access its own allocated memory and cannot interfere with the memory of other processes or the operating system itself. This protects the system from bugs, misbehaving programs, and malicious activities, enhancing both security and stability.

Our media player should not interfere with the text editor, which is running parallelly. To achieve this OS utilize hardware functionality to ensure that memory areas of one process are not accessible by other processes. There are different approaches depending on the hardware and the OS implementation.

For example, some ARM Cortex-M processors, commonly used in embedded systems, include a Memory Protection Unit (MPU), which allows the definition of a limited number (e.g., 8) of memory regions, each with configurable access permissions (such as no access, read-only, or read-write). On every memory access, the MPU checks if the address falls within one of the defined regions and whether the requested access complies with the region’s permissions. If the access violates these permissions, the MPU triggers an exception.

The operating system can update the MPU’s regions and access permissions during a context switch between processes. This ensures that each process is confined to its own memory space, preventing one process from accessing or modifying another process's memory, thus enforcing process isolation and improving system security.

On x86, the hardware supports two different approaches to memory protection: Segmentation and Paging.

Segmentation

Segmentation is a memory management technique used by operating systems to divide a program's logical address space into segments, which can vary in size. Each segment represents a logical unit of the program, such as functions, data structures, or the stack, making it easier to manage and protect memory.Segmentation was first introduced in 1978 to expand the amount of addressable memory. At the time, CPUs used 16-bit addresses, limiting addressable memory to 64 KiB. To overcome this limitation, segment registers were introduced, each holding an offset address. The CPU would automatically add this offset during memory access, enabling access to up to 1 MiB of memory.

The CPU selects the appropriate segment register automatically based on the type of memory access. For instruction fetching, it uses the code segment (CS); for stack operations like push and pop, the stack segment (SS) is used. Other instructions rely on the data segment (DS) or the extra segment (ES). Later, two additional segment registers, FS and GS, were introduced for general-purpose use.

In the original version of segmentation, the segment registers held only the offset, with no access control. This changed with the advent of protected mode. In protected mode, the segment descriptors contain an index that points to a local descriptor table (LDT) or global descriptor table (GDT). These tables store not only the segment offset but also the segment size and access permissions. By loading different descriptor tables for each process, the operating system can restrict memory access to specific areas, effectively isolating processes from one another.

Segmentation, by modifying memory addresses before access, laid the groundwork for a key modern concept: virtual memory.

What is Virtual Memory?

Virtual memory is a memory management technique that creates an illusion of a large, contiguous block of memory for each process, irrespective of the actual physical memory available in the system. It enables the execution of processes that require more memory than what is physically installed on the machine, by utilizing disk space as an extension of RAM.

Working:

Instead of directly accessing the storage device, a translation step is performed first.

For segmentation, the translation step is to add the offset address of the active segment.

Imagine a program accessing memory address 0x1234000 in a segment with an offset of 0x1111000: The address that is really accessed is 0x2345000.

To differentiate the two address types, addresses before the translation are called virtual, and the address after the translation are called physical.

One important difference between these two kinds of addresses is that physical addresses are unique and always refer to the same distinct memory location. Virtual addresses, on the other hand, depend on the translation function. It is entirely possible that two different virtual addresses refer to the same physical address. Also, identical virtual addresses can refer to different physical addresses when they use different translation functions.

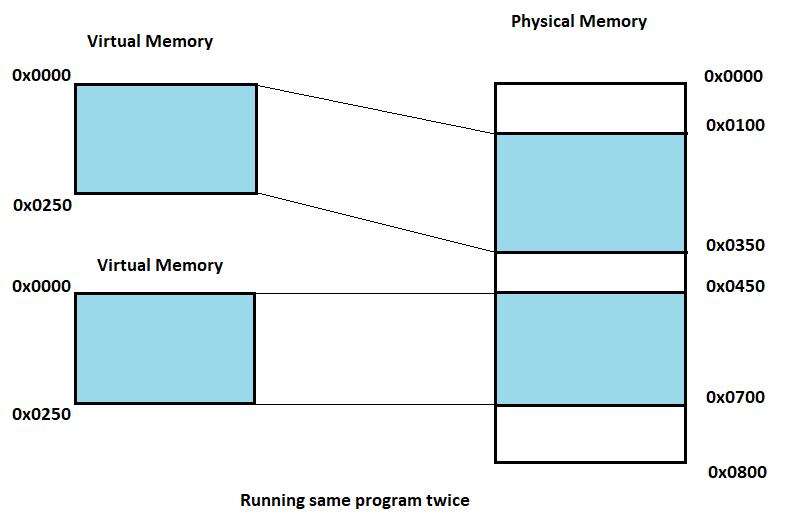

In this example using virtual memory the same program is run twice in parallel.

The first instance has the segment offset 0x0100, so that its virtual addresses 0x0000 - 0x0250 are translated to the physical addresses 0x0100 - 0x0350. The second instance has an offset of 0x0450, which translates its virtual address 0x0000 - 0x0250 to physical addresses 0x0450 - 0x0700. This allows both programs to run the same code and use the same virtual address without interfering with each other.

Now suppose we want to run the third instance of the program to virtual memory without overlapping, even though there is more than enough memory available. 0x0000 - 0x0100, 0x0350 - 0x0450 and 0x0700 - 0x0800 which makes total of 0x0300 amount of memory and it is more than 0x0250 required by the another instance of the process. However the problem is that these are not continuous. This problem is know as fragmentation, (external fragmentation specially). One way to combat this fragmentation is to pause execution, move the used parts closer together, and free parts to the end of the memory. This way we will get free regions to the end of the memory and there would be enough continuous space to start the third instance of the program.

The disadvantage of this process is that it needs to copy large amounts of memory, which decreases performance. This makes performance unpredictable since programs are paused at random times and might become unresponsive.

The External Fragmentation is one of the reasons that Segmentation is no longer used by most systems. In fact, segmentation is not even supported in 64-bit mode on x86 anymore. Instead, paging is used, which completely avoids the fragmentation problem.

Why do we need virtual memory

🅰 Large Process:

- To execute a process, its data must be loaded into main memory (RAM), which can be challenging if the process is large.

- Virtual memory offers an idealized abstraction of physical memory, creating the illusion of a larger virtual memory space than what is physically available.

- It combines active RAM with inactive memory on disk to provide a wide range of contiguous virtual addresses. Implementations of virtual memory typically require hardware support, often in the form of a memory management unit integrated into the CPU.

🅱 Multiprocessing:

The most beneficial of this property is running the same program twice in parallel.

In a compiled binary, each function is assigned a specific, fixed address in memory, and the assembly instructions that call these functions have those addresses hardcoded. Without virtual memory, it would be impossible to run two programs simultaneously because they could potentially require different functions to occupy the same physical address in memory.

Key Concepts of Virtual Memory in x86:

- Virtual Address Space:

- Each process is given its own virtual address space, which may be larger than the available physical memory.

- The virtual address space is divided into pages (typically 4 KB in size for x86 systems).

- The processor uses virtual addresses generated by programs, which are mapped to physical addresses via a translation mechanism.

- Paging:

- The x86 architecture uses paging as the primary mechanism for implementing virtual memory.

- Memory is divided into pages (fixed-size blocks, typically 4 KB).

- The operating system maintains a page table that maps virtual pages to physical page frames in memory.

- Each process has its own page table.

- Page Table Structure in x86:

- The x86 architecture uses a multi-level page table (e.g., two-level or more depending on the mode).

- This helps manage large address spaces without requiring massive single-level tables.

- Translation Lookaside Buffer (TLB):

- The TLB is a hardware cache that stores recent virtual-to-physical address mappings.

- It speeds up address translation by avoiding the need to look up the page table every time an address is accessed.

- Page Faults:

- When a program tries to access a virtual page that is not currently mapped to physical memory (i.e., not in the TLB or the page table), a page fault occurs.

- The operating system handles this by:

- Locating the page in secondary storage (e.g., on the hard disk or SSD).

- Loading the required page into physical memory (if needed, by evicting another page).

- Updating the page table and the TLB to reflect the new mapping.

Paging

Paging provides an alternative method for accessing physical memory. Instead of dividing memory into segments, it is split into pages and frames. A frame is a block of physical memory with a fixed size (commonly 4 KB), while a page is a corresponding block of virtual memory of the same size. The key feature is that pages and frames are identical in size. In a system using paging, programs do not interact with memory through physical addresses. Instead, they use virtual addresses, which the CPU translates into physical addresses. This translation process is complex and slower compared to direct memory access. To improve efficiency, most CPUs include a dedicated hardware component called the Memory Management Unit (MMU), which assists in managing this translation.

The idea is to divide both the virtual and physical memory space into small, fixed-size blocks.

The blocks of the virtual memory is called pages, and the blocks of the physical address space are called frames.

Each page can be individually mapped to a frame, which makes it possible to split larger memory regions across non-continuous physical frames.

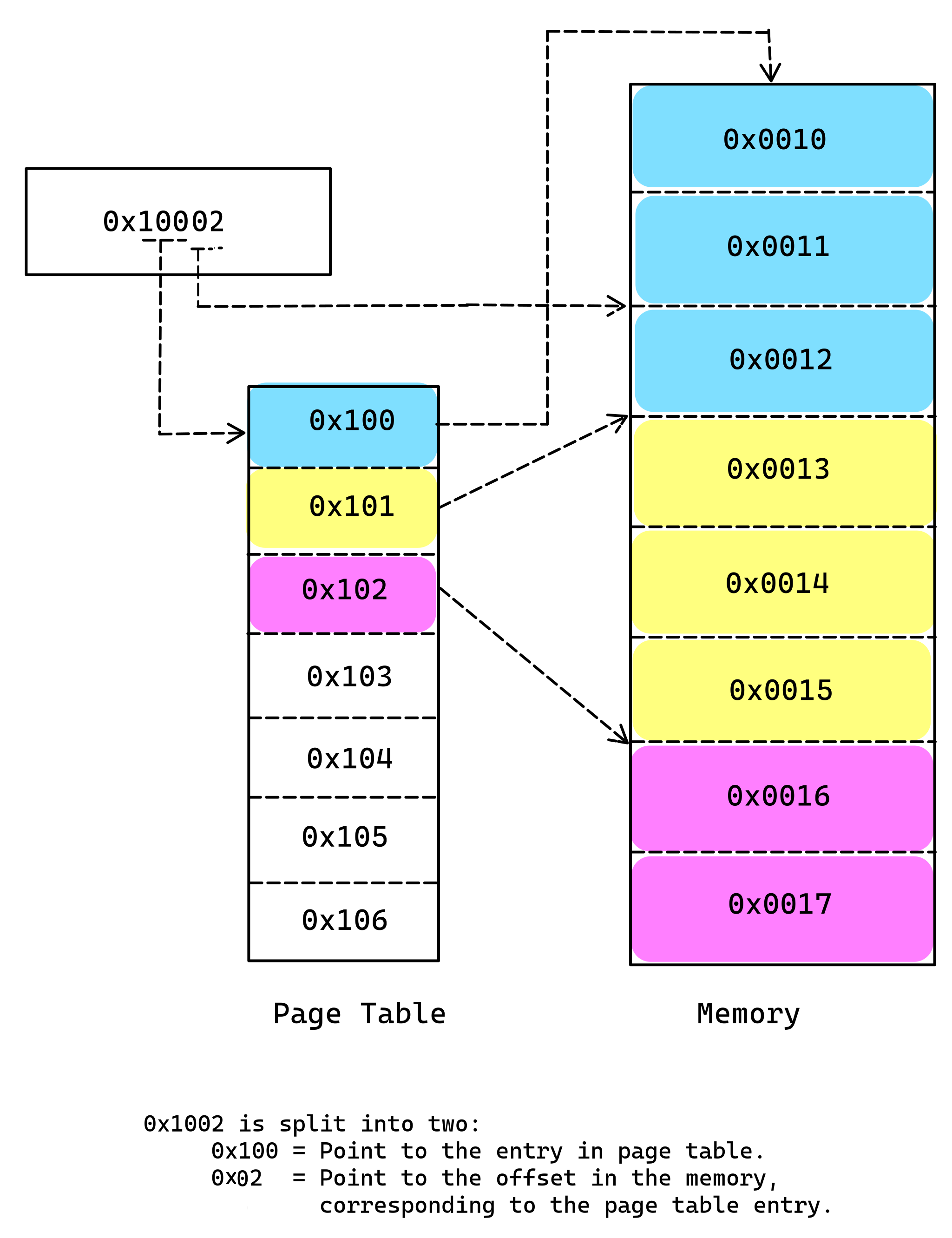

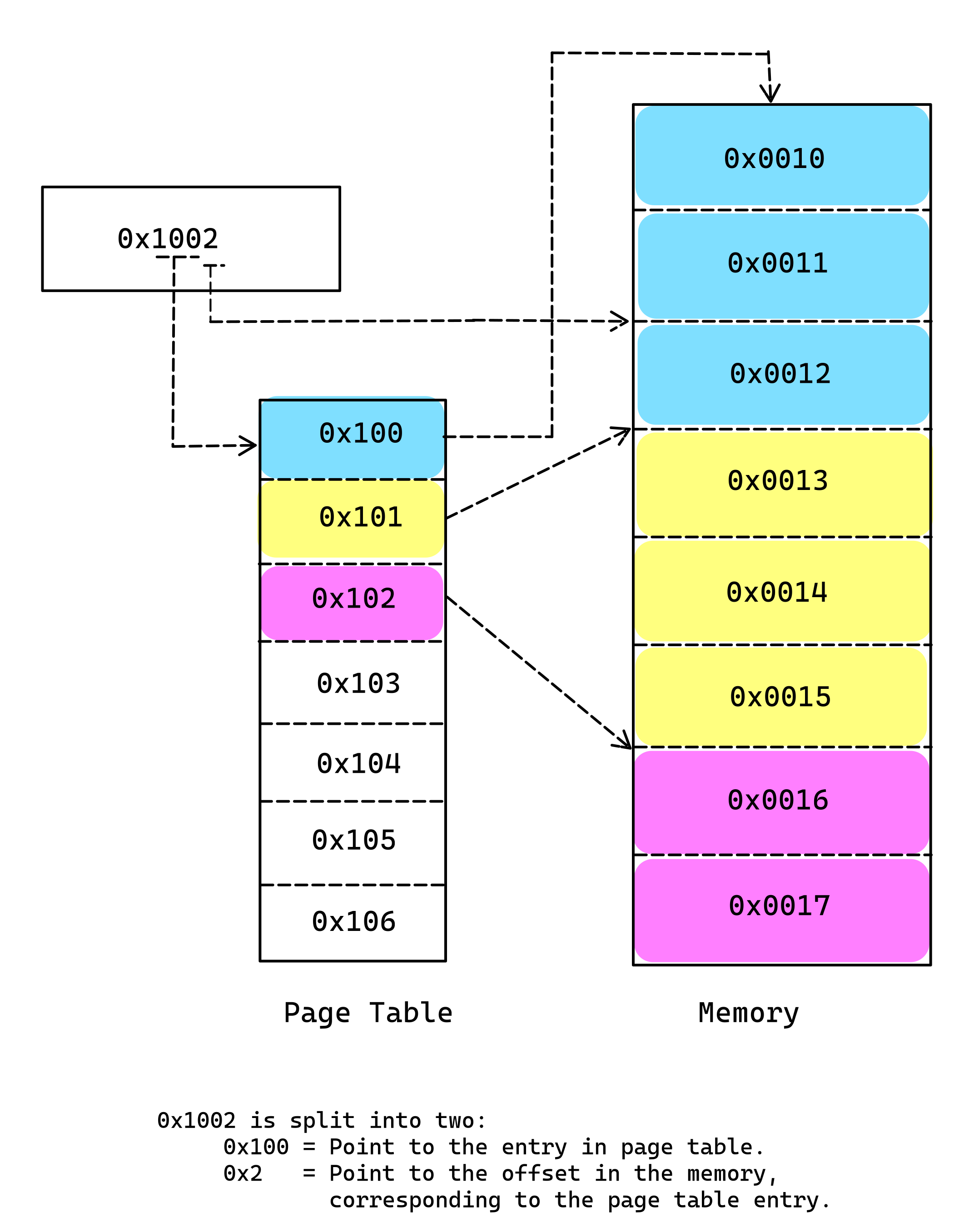

The image given below illustrates memory translation process. It is a very simplified example. It illustrates the high level concept of the paging.

In the image above the virtual address is 0x1002. The CPU divides the address into several parts in this case into two, 0x100 and 0x2. The CPU uses the first part to look up in the Page Table, which will provide an entry to the physical memory. In our case physical address associated with page table entry 0x100 is 0x10. CPU then takes the second part 0x2 and adds the last part of the address to the physical address associated with the table entry.

0x0010 + 0x02 = 0x0012Thus the CPU translates the virtual address 0x10002 into the 0x0012.

This approach can be extended to multi-level page tables.

Paging is handled directly by the CPU hardware. While it could theoretically be implemented in software, this would be far too slow, as every single access to RAM would require paging, introducing significant delays. By having the CPU manage paging at the hardware level, the process is much faster and more efficient.

Operating systems are responsible for setting up and controlling paging by communicating with the CPU hardware. This is mainly done through:

- The CR3 register, which tells the CPU where the page table is located in RAM.

- Writing the correct paging data structures to the RAM, which is pointed to by the CR3 register.

Using RAM-based data structures is common when large amounts of data need to be communicated to the CPU, as storing such data in CPU registers would be impractical due to size limitations. The format of these data structures is defined by the hardware, but it is the OS’s responsibility to properly set up and manage them in RAM and to inform the CPU where to find them (via CR3). To ensure efficient memory access, heavy caching mechanisms are employed, most notably the Translation Lookaside Buffer (TLB), which helps speed up address translations.

The OS enforces strict control over the paging system, preventing programs from directly modifying the paging setup. This is achieved by:

- Restricting modification of the CR3 register in ring 3 (user mode). Only the OS, running in ring 0 (kernel mode), has permission to modify CR3.

- Making the page table structures themselves inaccessible to the process using paging.

Paging in 32-bit x86 Architecture:

In modern computer systems, especially in the x86 architecture, paging is used to map virtual addresses to physical addresses efficiently. To handle large memory spaces and optimize memory management, paging systems are organized into multiple levels of page tables. Each level of paging introduces an additional layer of indirection, allowing the system to handle larger address spaces and reduce the memory overhead of page tables.

1️⃣ Single-Level Paging (Direct Paging)

Single-level paging is the simplest form of paging, where a single page table is used to map virtual addresses to physical addresses.

Structure: A single page table is used to map virtual page numbers to physical page frames.

Virtual Address Structure (32-bit)

In x86, a 32-bit virtual address is divided into three parts when using single-level paging:

- Page Directory Index (10 bits)

- Page Table Index (10 bits)

- Page Offset (12 bits)

Let's represent the virtual address as:

|Page Directory Index|Page Table Index|Page Offset |

| 10 bits | 10 bits | 12 bits |

Example:

0xCAFEBABE = 11001010111111101011101010111110 (binary)

| 1100101011 | 1111111010 | 101110101111 |

(Page Dir) (Page Table) (Page Offset)

- Page Directory Index (

1100101011): First 10 bits, this gives us0x32B. - Page Table Index (

1111111010): Next 10 bits, this gives us0x3FA. - Page Offset (

101110101111): Last 12 bits, this gives us0xBAF.

So, our virtual address 0xCAFEBABE breaks down into:

- Page Directory Index:

0x32B - Page Table Index:

0x3FA - Page Offset:

0xBAF

Page Directory Lookup:

Next, the CPU uses the Page Directory Index to look up the entry in the Page Directory.

The

CR3 registerholds the base address of thePage Directory. Let's assume it is0x00100000.CR3 → Page Directory @ 0x00100000The Page Directory is an array of 1,024 entries. Each entry is 4 bytes (32 bits) and points to a Page Table in memory. Using our Page Directory Index

0x32B, we calculate the offset:Page Directory Entry = 0x00100000 + (0x32B * 4) = 0x00100000 + 0xCAC = 0x00100CACSo, the Page Directory Entry for our virtual address is located at 0x00100CAC. Let’s assume the entry contains a pointer to Page Table at 0x00200000.

Page Table Lookup:

Now, the CPU needs to look up the Page Table Entry using the Page Table Index (0x3FA).

- The Page Table is an array of 1,024 entries, each pointing to a physical frame. Each entry is also 4 bytes.

The Page Table we are concerned with starts at 0x00200000. Using our Page Table Index

0x3FA, we calculate the address of the Page Table Entry:Page Table Entry = 0x00200000 + (0x3FA * 4) = 0x00200000 + 0x0FE8 = 0x00200FE8So, the Page Table Entry for our virtual address is located at 0x00200FE8. Let’s assume the entry contains a pointer to Physical Frame at 0xA000.

Physical Frame and Page Offset:

Finally, the CPU uses the Page Offset (0xBAF) to calculate the physical address within the physical frame.

The Page Offset is added to the base of the Physical Frame to determine the exact physical memory location.

Physical Address = Physical Frame + Page Offset = 0xA000 + 0xBAF = 0xA0BAFSo, the physical address corresponding to our virtual address

0xCAFEBABEis 0xA0BAF.

2️⃣ Two-Level Paging (32-bit x86 Paging)

In the 32-bit x86 architecture, virtual addresses are mapped to physical addresses using a two-level page table hierarchy. The virtual address is divided into three parts:

- Page Directory Index

- Page Table Index

- Offset within the page

3️⃣ Three-Level Paging (PAE Paging in x86)

In Physical Address Extension (PAE) mode, the x86 architecture extends the physical address space from 32-bit (4 GB) to 36-bit (64 GB). This introduces an additional level of paging: the Page Directory Pointer Table (PDPT), creating a three-level hierarchy.

4️⃣ Four-Level Paging (x86-64 Paging)

In the x86-64 architecture (used in 64-bit systems), a four-level paging scheme is used to map virtual addresses to physical addresses. This is necessary because of the much larger virtual address space in 64-bit systems.

5️⃣ Five-Level Paging (Extended x86-64 Paging)

Starting with Intel’s 5-level paging introduced with Ice Lake processors (supporting 5-level paging), five levels of page tables can be used to handle even larger address spaces.

Terms used in Paging

1 Page

A fixed-size block of virtual memory. The size of a page is typically 4 KB in most systems, though it can vary (e.g., 2 MB or 1 GB for large pages). Pages are used to divide the virtual address space.

2 Frame

A fixed-size block of physical memory that is the same size as a page. A frame is a physical storage unit that can hold a page from virtual memory. Each page in virtual memory is loaded into a corresponding frame in physical memory.

3 Page Table

A data structure used to store the mappings between virtual pages and physical frames. It’s maintained by the operating system and is essential for the process of translating virtual addresses into physical addresses.

4 Page Directory

In some architectures (like x86), the page directory holds pointers to page tables. It is a higher-level table used to locate the page tables. The CR3 register holds the base address of the page directory.

5 Page Directory Entry (PDE)

An entry in the Page Directory that points to a Page Table. Each entry contains flags like presence, read/write permission, and page-level attributes.

6 Page Table Entry (PTE)

An entry in the Page Table that maps a virtual page to a physical frame. It contains the physical frame number (PFN) and control flags such as whether the page is present in memory, permissions (read/write), and whether the page is dirty or accessed.

7 Virtual Address

The address used by a program to access memory. It is not the actual physical memory address but is translated by the paging mechanism into a physical address using page tables.

8 Physical Address

The actual memory address in the physical RAM. After virtual address translation, the CPU accesses the physical memory using this physical address.

9 Translation Lookaside Buffer (TLB)

A special cache in the CPU that stores recent translations from virtual addresses to physical addresses. The TLB helps speed up the paging process by reducing the need to repeatedly consult the page table.

10 Page Fault

An exception that occurs when a program tries to access a page that is not currently present in physical memory. The operating system handles this by either loading the required page from disk or terminating the process if the access is invalid.

11 CR3 Register (Page Directory Base Register)

A control register in x86 architectures that holds the base address of the Page Directory. The operating system loads the address of the page directory into this register when switching between processes.

12 Present Bit

A flag in a Page Table Entry (PTE) that indicates whether a page is currently present in physical memory. If the page is not present, accessing it causes a page fault.

13 Page Offset

The lower bits of a virtual address that specify the exact location within a page. These bits are added to the base address of the physical frame to get the final physical address.

14 Demand Paging

A memory management scheme where pages are loaded into memory only when they are needed, i.e., when a page fault occurs. This allows the system to use memory more efficiently, loading pages on-demand.

15 Swap Space

A reserved area on the disk used as an extension of RAM when physical memory runs out. Pages not actively in use may be swapped out to disk, and swapped back into memory when needed.

16 Page Replacement Algorithm

A method used by the operating system to decide which page to swap out of memory when a new page needs to be loaded into RAM, and there is no free frame available. Common algorithms include LRU (Least Recently Used), FIFO (First In, First Out), and Clock.

17 Segmentation

An alternative memory management scheme (often combined with paging) that divides memory into segments of variable sizes, each representing a logical portion of a program (e.g., code, data). In modern systems, paging has largely replaced segmentation.

18 Multilevel Paging

A paging scheme that uses multiple levels of page tables (e.g., 2-level or 3-level paging) to manage large virtual address spaces efficiently. Each level of the page table breaks down the virtual address further, reducing the size of the page tables stored in memory.

19 Memory Management Unit (MMU)

A hardware component in the CPU responsible for handling the translation of virtual addresses to physical addresses. The MMU uses the page tables and TLB to speed up address translation.

20 TLB Miss

Occurs when the required virtual-to-physical address mapping is not found in the TLB. In this case, the system must walk the page tables to find the mapping, which is slower than a TLB hit.

21 Protection Bits

Flags in the Page Table Entries (PTE) that define the access permissions of a page. These bits control whether a page can be read, written, or executed by the process.

22 Kernel Space vs. User Space

- Kernel Space: A reserved part of memory that can only be accessed by the operating system (kernel).

- User Space: The portion of memory where user programs run. Paging helps enforce protection between these spaces to prevent unauthorized access to kernel memory.

References

https://os.phil-opp.com/paging-introduction/

https://cs4118.github.io/www/2023-1/lect/18-x86-paging.html

https://cirosantilli.com/x86-paging

https://hackernoon.com/segmentation-and-paging-in-x86-xts3yp1

Leave a comment

Your email address will not be published. Required fields are marked *