Caching is a fundamental technique in modern systems. It improves performance, reduces latency, and makes better use of resources, but it also introduces complexity around correctness and consistency.

Why caches matter

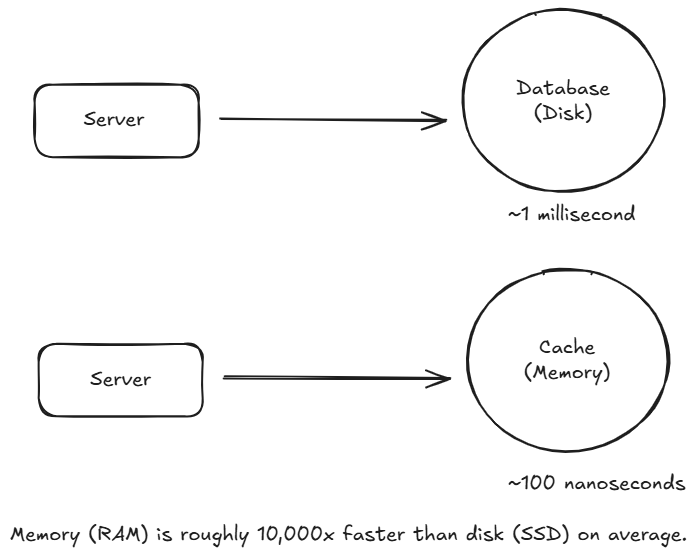

- Latency reduction: Serve data from memory or nearby edge nodes rather than slow disks or remote services.

- Throughput improvement: Reduce backend CPU/IO load so systems sustain higher QPS.

- Cost savings: Fewer database reads/writes and less compute on origin service.

- Availability/Resilience: Caches can act as a buffer when backends are degraded or during spikes.

But caching also introduces staleness, invalidation complexity, and additional failure modes. Good caching is about balancing performance with correctness.

What is Caching?

Caching is the process of storing copies of data or computational results in a temporary storage area—known as a cache—so that future requests for that data can be served faster. Instead of repeatedly fetching data from a slower primary data source, such as a database or an external API, systems use caches to quickly retrieve frequently accessed information.

What is a Cache?

A cache is a temporary storage area that holds the results of expensive operations or frequently accessed data in memory so that subsequent requests are served more quickly.

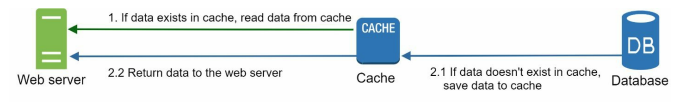

How Does it Work?

After receiving a request, a web server first checks whether the cache holds the response. If it does, the server sends the cached data back to the client. If not, it queries the database, stores the response in the cache, and sends it back to the client. This caching strategy is called a read-through cache. Other caching strategies are available depending on the data type, size, and access patterns.
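The read path described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: the dictionary `cache`, the function `query_database`, and the `user:<id>` key format are all hypothetical stand-ins for a real cache server and data store.

```python
# Hypothetical stand-ins: a dict plays the cache, a function plays the database.
cache = {}
db_calls = 0


def query_database(user_id):
    """Pretend to be a slow database lookup (illustrative only)."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}


def get_user(user_id):
    key = f"user:{user_id}"
    if key in cache:
        # Cache hit: answer without touching the database.
        return cache[key]
    # Cache miss: load from the database, then populate the cache.
    value = query_database(user_id)
    cache[key] = value
    return value


first = get_user(42)   # miss, goes to the database
second = get_user(42)  # hit, served from the cache
print(db_calls)
```

Only the first call reaches the stand-in database; the second is answered from the cache, which is the source of the latency and load benefits listed earlier.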

Interacting with cache servers is simple because most cache servers provide APIs for common programming languages. The following code snippet shows typical Memcached APIs:

SECONDS = 1

cache.set('myKey', 'its value', 3600 * SECONDS)

cache.get('myKey')

Considerations for using cache:

- Decide when to use cache. Consider using cache when data is read frequently but modified infrequently. Since cached data is stored in volatile memory, a cache server is not ideal for persisting data. For instance, if a cache server restarts, all the data in memory is lost. Thus, important data should be saved in persistent data stores.

- Expiration Policy: It is good practice to implement an expiration policy. Once cached data expires, it is removed from the cache. Without an expiration policy, cached data stays in memory until it is evicted or the server restarts. Do not make the expiration time too short, as this causes the system to reload data from the database too frequently. Do not make it too long either, as the data can become stale.

- Consistency: This involves keeping the data store and the cache in sync. Inconsistency can happen because data-modifying operations on the data store and cache are not in a single transaction. When scaling across multiple regions, maintaining consistency between the data store and cache is challenging.

- Mitigating failures: A single cache server represents a potential single point of failure (SPOF), defined in Wikipedia as follows: “A single point of failure (SPOF) is a part of a system that, if it fails, will stop the entire system from working”. As a result, multiple cache servers across different data centers are recommended to avoid a SPOF. Another recommended approach is to overprovision the required memory by a certain percentage. This provides a buffer as memory usage increases.

- Eviction Policy: Once the cache is full, any requests to add items to the cache might cause existing items to be removed. This is called cache eviction. Least-recently-used (LRU) is the most popular cache eviction policy. Other eviction policies, such as the Least Frequently Used (LFU) or First in First Out (FIFO), can be adopted to satisfy different use cases.
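The expiration and eviction policies from the list above can be combined in one small in-process cache. The sketch below is an assumption-laden illustration: the class name `TTLLRUCache`, its `capacity` and `clock` parameters, and the `set`/`get` signatures are invented here and do not correspond to Memcached or any other real cache server. It uses an `OrderedDict` to track recency and stores an expiry timestamp next to each value.

```python
import time
from collections import OrderedDict


class TTLLRUCache:
    """Illustrative cache with per-entry expiration and LRU eviction."""

    def __init__(self, capacity, clock=time.monotonic):
        self.capacity = capacity
        self.clock = clock           # injectable so expiry is easy to test
        self._items = OrderedDict()  # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._items.pop(key, None)   # re-inserting puts the key at the newest position
        self._items[key] = (value, self.clock() + ttl_seconds)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]     # expired: treat as a miss
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return value


# Usage with a fake clock so the behaviour is deterministic.
now = [0.0]
cache = TTLLRUCache(capacity=2, clock=lambda: now[0])
cache.set("a", 1, ttl_seconds=10)
cache.set("b", 2, ttl_seconds=100)
cache.get("a")                      # "a" becomes the most recently used entry
cache.set("c", 3, ttl_seconds=100)  # capacity exceeded, so "b" is evicted
now[0] = 11.0                       # move time past the expiry of "a"
```

At this point `"b"` has been evicted by LRU, `"a"` has expired, and only `"c"` is still readable. Swapping the eviction rule for LFU or FIFO would change only the bookkeeping inside `set` and `get`.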

Why is Caching Essential in System Design?

- Speed and Responsiveness: By storing data in memory or other fast-access storage, caches reduce the time required to serve data, thereby increasing the overall speed and responsiveness of applications.

- Load Reduction: Caches help alleviate the load on primary data stores. This is especially important when dealing with high traffic volumes or complex queries, ensuring that the main data source is not overwhelmed.

- Cost Efficiency: Utilizing caching mechanisms can lead to significant cost savings. Faster data retrieval can reduce the need for expensive hardware upgrades or extensive server resources, as the cache minimizes redundant operations.

- Improved User Experience: In scenarios where real-time data delivery is crucial, such as web applications and online services, caching ensures that users experience minimal delays, resulting in a smoother interaction with the system.

Benefits of Caching

Caching provides several critical advantages that contribute to robust and high-performing system architectures. Key benefits include:

- Performance Improvement and Latency Reduction

  - Faster Data Access: Caches store frequently used data in memory, which is much faster to access than disk-based storage. This results in reduced response times for user requests.

  - Reduced Computation Time: By caching the results of complex computations or database queries, systems can avoid redundant processing, thereby lowering the processing time for repeat requests.

- Reduced Load on Primary Data Sources

  - Offloading Work: When a cache successfully serves a data request, the primary data source (e.g., a database or remote server) is bypassed. This reduction in direct requests can help prevent performance bottlenecks and ensure that critical backend systems remain responsive.

  - Enhanced Scalability: With a well-implemented caching strategy, systems can handle higher loads more gracefully. By minimizing the number of direct queries to a database, the system scales more effectively under increased traffic.

- Cost Efficiency in Resource Utilization

  - Lower Operational Costs: Caching can decrease the need for extensive server infrastructure. Serving repeated reads from a cache is often cheaper than scaling out database or compute capacity to handle the same traffic, so organizations can achieve significant cost savings.

  - Energy and Resource Savings: Efficient caching mechanisms reduce the overall computational workload, which can lead to energy savings and a lower environmental footprint in large-scale data centers.
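The "Reduced Computation Time" benefit above can be illustrated with Python's standard `functools.lru_cache` decorator, which memoizes a function's results in process memory. The function `expensive_report` and its workload are hypothetical stand-ins for a costly computation or query; the counter only exists to show how often the real work runs.

```python
from functools import lru_cache

calls = 0


@lru_cache(maxsize=128)
def expensive_report(customer_id):
    """Hypothetical stand-in for a costly computation or database query."""
    global calls
    calls += 1
    return sum(i * i for i in range(10_000)) + customer_id


# Five identical requests: only the first one does the real work.
for _ in range(5):
    expensive_report(7)

info = expensive_report.cache_info()
print(calls, info.hits, info.misses)
```

The `cache_info()` counters give a simple hit ratio, which is a useful first metric when judging whether a cache is actually offloading work from the primary data source.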
