When we talk about performance in system design, one term appears everywhere – latency.

It's one of the most important metrics describing how fast a system responds, and it directly impacts user experience, scalability, and reliability.

What is Latency?

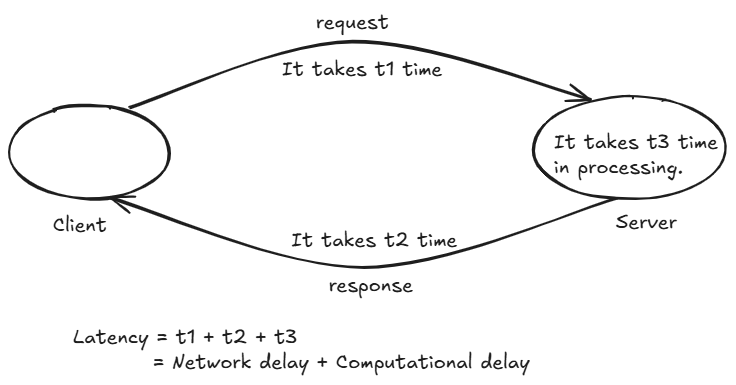

Latency is the delay between the initiation of an action and the moment its effect is observed. In the context of system design, it is the time taken for a request to travel from the client to the server, be processed, and for the response to return to the client. Lower latency means a more responsive system and, in general, better perceived performance.

The browser (client) sends a request to the server whenever the user performs an action. The server processes the request, retrieves the required information, and sends it back to the client. Latency is therefore the interval between the moment the client starts a request and the moment the server's result is delivered back to the client, i.e., the round-trip time between client and server.

Example:

When you click on “Buy Now” on Amazon:

- Your request goes to the backend server.

- The server processes it, checking inventory, processing the payment, and querying the database.

- You get a confirmation response.

If this entire round trip takes 350 milliseconds, that's your latency.

For a monolithic system, latency is essentially just computational delay.

For a distributed system, it is network delay plus computational delay.
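Written as a formula, with a purely hypothetical split of the 350 ms "Buy Now" round trip into 80 ms of network delay and 270 ms of processing (the split is illustrative, not measured):

$$
\begin{aligned}
L_{\text{monolithic}} &= T_{\text{compute}} \\
L_{\text{distributed}} &= T_{\text{network}} + T_{\text{compute}} \\
&= 80\ \text{ms} + 270\ \text{ms} = 350\ \text{ms} \quad \text{(hypothetical values)}
\end{aligned}
$$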

How does Latency Work?

Latency is simply the amount of time a client waits after initiating a request until the result is received. Consider the following example from an e-commerce website:

- When you click the "Add to Cart" button, the latency timer starts as your browser sends a request to the server.

- The server receives and processes this request.

- Once processed, the server sends a response back to your browser, and the product is added to your cart.

If you were to measure the time from when you pressed the button to when the product appears in your cart, that duration represents the system's latency.
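To make the "Add to Cart" flow concrete, here is a minimal Python sketch (standard library only) that times one request/response round trip against a local toy server. The `/cart` path, the `CartHandler` class, and the 50 ms `PROCESSING_DELAY_S` are hypothetical stand-ins for a real backend. Because client and server share one machine here, nearly all of the measured latency is the simulated processing time rather than network delay.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

PROCESSING_DELAY_S = 0.05  # hypothetical server-side work (inventory check, DB write, ...)


class CartHandler(BaseHTTPRequestHandler):
    """Toy stand-in for an "Add to Cart" endpoint (hypothetical)."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)          # consume the request body
        time.sleep(PROCESSING_DELAY_S)   # simulate the server processing the request
        body = b'{"status": "added"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # silence per-request logging
        pass


def measure_latency(host: str, port: int, path: str, payload: bytes) -> tuple[float, bytes]:
    """Return (seconds from sending the request to receiving the full response, body)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    start = time.perf_counter()          # timer starts when the client initiates the request
    conn.request("POST", path, body=payload, headers={"Content-Type": "application/json"})
    body = conn.getresponse().read()     # ...and stops once the whole response is back
    elapsed = time.perf_counter() - start
    conn.close()
    return elapsed, body


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), CartHandler)  # port 0: pick any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    latency, body = measure_latency("127.0.0.1", server.server_port, "/cart", b'{"product_id": 42}')
    print(f"latency: {latency * 1000:.1f} ms -> {body.decode()}")
    server.shutdown()
    server.server_close()
```

Against a remote server, the same timer would also capture the network delay in both directions.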

What Causes Latency?

Latency is influenced by several factors that can add delays to a system. For instance, when you click the "Add to Cart" button on an e-commerce site, your browser sends a request to a backend server. To complete the transaction, this server might need to call several other services, either sequentially or in parallel. Sequential calls add their latencies together, while parallel calls cost roughly as much as the slowest one, so every outbound call can add to the overall latency.
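A minimal sketch of the sequential-versus-parallel difference using Python's `asyncio`. The three downstream services and their delays are hypothetical, and `asyncio.sleep` stands in for real network calls.

```python
import asyncio
import time

# Hypothetical downstream services and their simulated response times in seconds.
SERVICE_DELAYS = {"inventory": 0.10, "pricing": 0.05, "recommendations": 0.15}


async def call_service(name: str, delay: float) -> str:
    """Stand-in for an outbound network call: it simply waits `delay` seconds."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def call_sequentially() -> list[str]:
    # Each call starts only after the previous one returns, so the delays add up.
    return [await call_service(name, delay) for name, delay in SERVICE_DELAYS.items()]


async def call_in_parallel() -> list[str]:
    # All calls are in flight at once, so the total is roughly the slowest call.
    calls = (call_service(name, delay) for name, delay in SERVICE_DELAYS.items())
    return list(await asyncio.gather(*calls))


def timed(make_coro) -> float:
    """Run a coroutine function to completion and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    asyncio.run(make_coro())
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {timed(call_sequentially) * 1000:.0f} ms")
    print(f"parallel:   {timed(call_in_parallel) * 1000:.0f} ms")
```

With these made-up delays the sequential version should take roughly 300 ms (100 + 50 + 150) and the parallel version roughly 150 ms (the slowest call). This is why independent downstream calls are usually issued concurrently.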

Key factors that contribute to latency include:

- Transmission Mediums:

The physical connection used to transmit data, such as WAN links or fiber optic cables, plays a crucial role. Each medium has its own limitations and affects the speed at which data travels between endpoints.

- Propagation:

The distance between communicating nodes is significant. The farther apart these nodes are, the longer it takes for data to travel between them, thereby increasing latency.

- Routers:

Routers process packets by analyzing their header information. Every hop a packet makes from one router to another adds a small delay, and multiple hops can cumulatively increase the overall latency.

- Storage Delays:

The type of storage system used can also affect latency. Accessing data from storage systems, especially when the data must be processed or retrieved from slower storage types, can introduce additional delays.
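These factors can be turned into a rough back-of-the-envelope budget. The sketch below assumes a signal speed in optical fiber of about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and treats per-hop router delay and storage delay as fixed, hypothetical constants. In reality, router delay varies with queueing and load.

```python
# Back-of-the-envelope one-way latency budget. Every input is an assumption you supply.
SPEED_IN_FIBER_KM_PER_S = 200_000  # light in optical fiber: roughly 2/3 of its speed in a vacuum


def transmission_delay_ms(payload_bytes: int, bandwidth_mbps: float) -> float:
    """Time to push every bit of the payload onto the link: size / bandwidth."""
    return payload_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000


def propagation_delay_ms(distance_km: float) -> float:
    """Time for the signal to cover the distance: distance / signal speed."""
    return distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


def one_way_latency_ms(payload_bytes: int, bandwidth_mbps: float, distance_km: float,
                       hops: int, per_hop_ms: float, storage_ms: float) -> float:
    """Sum transmission, propagation, router, and storage delays for one one-way trip."""
    return (
        transmission_delay_ms(payload_bytes, bandwidth_mbps)  # transmission medium
        + propagation_delay_ms(distance_km)                   # propagation
        + hops * per_hop_ms                                   # routers, assumed constant per hop
        + storage_ms                                          # storage read at the far end
    )


if __name__ == "__main__":
    # Hypothetical inputs: 1,500-byte packet, 100 Mbps link, 4,000 km path,
    # 10 router hops at 0.5 ms each, and a 2 ms storage read.
    total = one_way_latency_ms(1500, 100, 4000, 10, 0.5, 2.0)
    print(f"one-way latency: {total:.2f} ms")  # 0.12 + 20 + 5 + 2 = 27.12 ms
```

Even in this made-up case, propagation dominates. No amount of server tuning removes the 20 ms that 4,000 km of fiber costs each way, which is why moving content closer to users (for example with a CDN) helps.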

Overall, these factors combine to determine how quickly a system can process and respond to a request.

How to Measure Latency?

Latency can be measured using several techniques, depending on what aspect of the system you want to evaluate. Here are some common methods:

1 Network Tools

- Ping:

Sends ICMP echo requests to a target and measures the round-trip time (RTT), which gives an indication of network latency.

ping www.anonymous.com

This ping output shows that your computer successfully sent four small data packets (32 bytes each) to the host "____" (IP address __.__.__.__) and received a reply for each one. Here's what each part means:

"Pinging anonymous.com [__.__.__.__] with 32 bytes of data:" Your computer is sending packets of 32 bytes to the specified IP address.

Individual reply lines: each packet produces a reply line with these fields:

- bytes=32: The reply contains 32 bytes of data.

- time=20ms / time=52ms: The time it took for the packet to travel to the server and back (round-trip time). Three replies were fast (20 milliseconds) and one took a bit longer (52 milliseconds).

- TTL=57: "Time To Live." Every router that forwards a packet decrements this value by one, and the packet is discarded if it reaches zero. The number shown is what remained when the reply arrived, so for the same initial value (commonly 64, 128, or 255) a lower TTL means the reply passed through more routers on its way back.

Ping statistics:

- Packets: Sent = 4, Received = 4, Lost = 0 (0% loss): All four packets were returned, so there was no packet loss.

- Minimum = 20ms: The fastest reply took 20 milliseconds.

- Maximum = 52ms: The slowest reply took 52 milliseconds.

- Average = 28ms: On average, a packet took 28 milliseconds to make the round trip, i.e., (20 + 20 + 20 + 52) / 4 = 28 ms.

- Traceroute:

- Displays the path that packets take to reach a destination, showing the latency at each hop along the route.
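Sending ICMP packets from a program usually requires raw sockets and elevated privileges. The Python sketch below therefore swaps in a different technique: timing TCP connection handshakes as a rough RTT probe. It then summarizes the samples the way ping's statistics section does. Feeding it the four replies described above (20, 20, 20, and 52 ms) reproduces the reported minimum, maximum, and average. The function names are illustrative, not part of any ping tool.

```python
import socket
import statistics
import time


def summarize_rtts(rtts_ms: list[float], sent: int) -> dict:
    """Ping-style summary: packet loss plus min/max/average round-trip time."""
    received = len(rtts_ms)
    return {
        "sent": sent,
        "received": received,
        "loss_pct": 100 * (sent - received) / sent,
        "min": min(rtts_ms) if rtts_ms else None,
        "max": max(rtts_ms) if rtts_ms else None,
        "avg": statistics.mean(rtts_ms) if rtts_ms else None,
    }


def tcp_connect_rtts(host: str, port: int, count: int = 4, timeout: float = 1.0) -> list[float]:
    """Time TCP handshakes as a rough RTT probe (not ICMP, so no raw-socket privileges needed)."""
    rtts = []
    for _ in range(count):
        start = time.perf_counter()
        try:
            with socket.create_connection((host, port), timeout=timeout):
                rtts.append((time.perf_counter() - start) * 1000)  # handshake done
        except OSError:
            pass  # treat a refused or timed-out connection like a lost packet
    return rtts


if __name__ == "__main__":
    # The four replies described above: three at 20 ms and one at 52 ms.
    print(summarize_rtts([20, 20, 20, 52], sent=4))  # min 20, max 52, avg 28, 0% loss
    # To probe a real service, choose a host and an open port yourself, e.g.:
    # print(summarize_rtts(tcp_connect_rtts("example.com", 443), sent=4))
```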

2 Application-Level Metrics

- Time-to-First-Byte (TTFB):

- Measures the time from when a client sends a request until the first byte of the response is received. This metric is often available in browser developer tools or via command-line tools like curl.

- Response Time:

- Records the total time taken from sending a request to receiving the complete response. This can be logged at the server level or tracked using performance monitoring tools.
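The difference between TTFB and total response time can be seen with a small Python sketch (standard library only) against a hypothetical local server. The server sends its headers immediately and then trickles the body out in three delayed chunks. Here TTFB is approximated as the time until `http.client` has read the status line and headers. With curl, comparable numbers come from the `-w` write-out variables `%{time_starttransfer}` and `%{time_total}`.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

CHUNK_DELAY_S = 0.1  # hypothetical pause before each chunk of the body is sent


class SlowBodyHandler(BaseHTTPRequestHandler):
    """Toy server: sends status line and headers at once, then the body in delayed chunks."""

    def do_GET(self):
        chunks = [b"part-1\n", b"part-2\n", b"part-3\n"]
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(sum(len(c) for c in chunks)))
        self.end_headers()               # the first bytes of the response leave now
        for chunk in chunks:
            time.sleep(CHUNK_DELAY_S)    # simulate a slowly generated body
            self.wfile.write(chunk)

    def log_message(self, format, *args):  # silence per-request logging
        pass


def ttfb_and_total(host: str, port: int, path: str = "/") -> tuple[float, float]:
    """Return (approximate TTFB, total response time) in seconds for one GET request."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    start = time.perf_counter()
    conn.request("GET", path)
    response = conn.getresponse()        # returns once the status line and headers are read
    ttfb = time.perf_counter() - start
    response.read()                      # blocks until the complete body has arrived
    total = time.perf_counter() - start
    conn.close()
    return ttfb, total


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), SlowBodyHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    ttfb, total = ttfb_and_total("127.0.0.1", server.server_port)
    print(f"TTFB: {ttfb * 1000:.1f} ms, response time: {total * 1000:.1f} ms")
    server.shutdown()
    server.server_close()
```

A low TTFB with a high total response time points at slow body generation or transfer rather than slow request handling up front.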

Latency Optimization

Latency optimization involves a set of strategies aimed at reducing the delay between sending a request and receiving a response. The goal is to create a faster, more responsive system. Here are some key approaches:

1 Network Improvements

- Content Delivery Networks (CDNs):

Cache static resources closer to users, reducing the physical distance data must travel.

- Optimized Routing:

Use efficient routing protocols and minimize the number of hops between client and server.

- Upgraded Infrastructure:

Employ high-speed connections (like fiber optics) and reduce network congestion.
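To see why caching content closer to users pays off, here is a simplified Python model of average latency behind a CDN. It assumes cache hits are served from the edge, while misses pay the edge round trip plus an edge-to-origin fetch, and it ignores processing time. The numbers in the example (10 ms to the edge, 80 ms from edge to origin) are hypothetical.

```python
def expected_latency_ms(hit_ratio: float, edge_rtt_ms: float, edge_to_origin_rtt_ms: float) -> float:
    """Average request latency behind a CDN edge (simplified: ignores processing time).

    A hit is served by the edge: one client-to-edge round trip.
    A miss also makes the edge fetch from the origin: edge round trip + edge-to-origin round trip.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ms = edge_rtt_ms + edge_to_origin_rtt_ms
    return hit_ratio * edge_rtt_ms + (1 - hit_ratio) * miss_ms


if __name__ == "__main__":
    # Hypothetical: 10 ms to the nearby edge, 80 ms from the edge back to the origin.
    for ratio in (0.5, 0.9, 0.99):
        print(f"hit ratio {ratio:.0%}: ~{expected_latency_ms(ratio, 10, 80):.1f} ms")
```

Under these assumptions, a 90% hit ratio gives about 18 ms on average and a 50% hit ratio about 50 ms, so the hit ratio matters as much as the edge's proximity.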

2 Server and Application Enhancements

- Efficient Code and Algorithms:

Optimize code paths and choose efficient algorithms to process requests faster.

- Asynchronous Processing:

Use asynchronous or non-blocking operations to prevent delays during resource-intensive tasks.

- Load Balancing:

Distribute incoming requests evenly across servers to prevent any single server from becoming a bottleneck.
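Round-robin is one of the simplest ways to spread requests evenly. Here is a minimal single-threaded Python sketch with hypothetical server names:

```python
import itertools
from collections import Counter


class RoundRobinBalancer:
    """Sends each new request to the next server in a fixed rotation (single-threaded sketch)."""

    def __init__(self, servers: list[str]):
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = itertools.cycle(servers)

    def pick(self) -> str:
        """Return the server that should handle the next request."""
        return next(self._rotation)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])  # hypothetical servers
    load = Counter(balancer.pick() for _ in range(9))
    print(load)  # each of the three servers receives 3 of the 9 requests
```

Round-robin ignores how busy each server currently is. Load balancers therefore also offer other policies, such as least-connections, which favors the server with the fewest active requests.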

3 Data Access Optimization

- Caching Strategies:

Implement in-memory caches (like Redis or Memcached) to store frequently accessed data, reducing database query times.

- Database Indexing and Query Optimization:

Optimize database queries and indexes to speed up data retrieval.

- Data Partitioning and Replication:

Spread the load by partitioning data across multiple servers or replicating databases.

4 Client-Side Optimization

- Minimize Resource Sizes:

Compress files and optimize images to reduce download times.

- Lazy Loading:

Load non-critical resources asynchronously, allowing the user interface to render faster.
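To see how shrinking resources cuts download time, here is a Python sketch using the standard `gzip` module on a hypothetical, highly repetitive HTML snippet. Transfer time is estimated as size divided by an assumed 10 Mbps bandwidth, ignoring round trips and protocol overhead. In practice, the browser and server negotiate compression through the `Accept-Encoding` and `Content-Encoding` HTTP headers.

```python
import gzip


def transfer_time_ms(num_bytes: int, bandwidth_mbps: float) -> float:
    """Time to download num_bytes over a link of the given bandwidth (ignores RTT and overhead)."""
    return num_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000


# Hypothetical, highly repetitive asset; real HTML/CSS/JS usually compresses less dramatically.
asset = ("<div class='product-card'><span class='price'>$9.99</span></div>\n" * 2000).encode()
compressed = gzip.compress(asset)

if __name__ == "__main__":
    for label, size in (("original", len(asset)), ("gzip", len(compressed))):
        print(f"{label:>8}: {size:>7} bytes, ~{transfer_time_ms(size, 10):.2f} ms at 10 Mbps")
```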
